With most of the votes counted, Stochastic Democracy analyzes how well it's model's predictions did vs Nate Silver's FiveThirtyEight, as well as drawing lessons for future election forecasting models.

See below the fold for details, or click through the site to see an interactive web applet.

StochasticDemocracy.Blogspot.com

**************Cross-Posted at StochasticDemocracy**********

With most of the results in, it's now possible to make a comprehensive comparison with Nate's FiveThirtyEight. (See my pre-election comparison of our methodologies here)

Summary:

Stochastic Democracy did a better job predicting the presidential race, FiveThirtyEight did a better job in the Senate, and both sides performed equally well predicting the popular vote.

Electoral College:

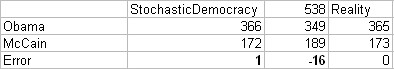

Our final predictions:

But because of the winner-take-all system, this is not a good way to gauge accuracy. Instead, it is a better idea to look at how we did at predicting the margins in individual states:

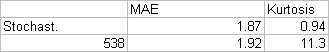

In terms of mean absolute error, my model had a slightly lower mean absolute error than his. But the Kurtosis of his prediction residuals is much higher than mine.

That means that most of the time, his predictions were slightly more accurate then mine. But when his predictions were off, they were really really off.

If I had to pick a culprit for this, I would say this is a consequence of his extensive and much celebrated use of regression. The idea behind regression here, that it's possible to predict a state's political orientation by simply applying a mathematical formula to it's demographics, usually works depressingly well.

But when it fails, it fails badly(See Indiana). And while it's possible to correct for this by using fat tailed error distributions, it doesn't seem that Nate did this.

But, everyone has their own metrics for this sort of thing, so for those who want to see the raw data, click here.

Senate:

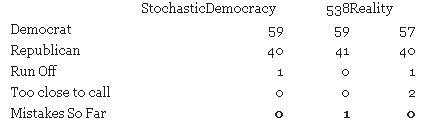

Nate and I basically made the same senate predictions, with one exception: Georgia. I predicted that a run-off was going to be called in Georgia, while Nate predicted that the Republicans were favorites to win the seat outright. Luckily, I made the right call there.

That aside, we made the same mistakes, in Minnesota and Alaska. While neither of races have been called yet, the Republican canidate is currently ahead in both states.

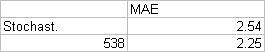

But it's uninformative to just see if we correctly predicted the winner in the state. By looking at margins, we see Nate's model edges out mine slightly.

It seems that when it comes to the Senate, Nate's model did a better job then mine. Why?

Nate accounted for house effects and pollster reliability, while I never had time to implement such things into my own model. While this turned out to be unimportant for the presidential race, this election has shown that as a whole, Senate polls suck. Of course, these effects can and will be implemented into my model easily, so we will see if the discrepancy continues with the next edition of my model.

On the other hand, the theoretical simplicity and resulting flexibility of my model allowed me to adapt to non-standard situations, like the Georgia run-off, better than Nate's model. I believe this flexibility will make my approach more popular in future elections.

For those who want to see the raw data, click here.

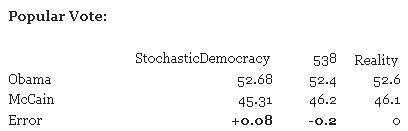

Popular Vote:

I did a better job predicting Obama's share of the vote, while Nate did a (much) better job predicting McCain's share of the vote.

I'm going to declare this one a tie, mainly since votes are still being counted, and we both did pretty well. Still, since Nate did such a better job predicting McCain's number, this should lean toward FiveThirtyEight.

[Note, new numbers since then indicate that my prediction was unambiguously better - 12/1/08]

Conclusion:

Altogether, the comparison is a bit of a wash up, and this election has provided both of us clear paths for how to improve in the future.

Still, I hope that everyone who enjoyed both our site's coverage of the election comes back for post-election analysis. Both of us have interesting work coming up.

Update: Pollster seemed to do very well also

Update: The popular vote numbers have changed, with the new results indicating Obama/McCain : 52.81%--45.80%

My prediction: Obama/McCain : 52.68/ 45.31

538's prediction:Obama/McCain: 52.4 / 46.2

While the different forecasts were very close, StochasticDemocracy unambiguously outperformed 538.

**************Cross-Posted at StochasticDemocracy**********